niftynet.network.dense_vnet module

-

class

DenseVNet(num_classes, hyperparams={}, w_initializer=None, w_regularizer=None, b_initializer=None, b_regularizer=None, acti_func='relu', name='DenseVNet')[source]¶ Bases:

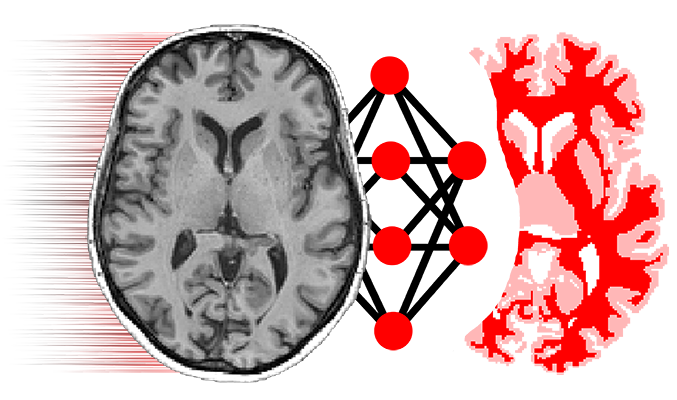

niftynet.network.base_net.BaseNet- implementation of Dense-V-Net:

- Gibson et al., “Automatic multi-organ segmentation on abdominal CT with dense V-networks” 2018

### Diagram

DFS = Dense Feature Stack Block

- The input image is first downsampled to a given size.

- Each DFS block with downsampling (DFS+SD) outputs a skip link and a downsampled output.

- All skip outputs are upsampled to the size of the initially downsampled image.

- If an initial prior is given, it is added to the output prediction.

Network layout:

    Input
      |
      --[ DFS ]-----------------------[ Conv ]------------[ Conv ]------[+]-->
               |                                   |  |                  |
               -----[ DFS ]---------------[ Conv ]--  |                  |
                           |                          |                  |
                           -----[ DFS ]-------[ Conv ]-                  |
                                                           [ Prior ]------
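The data flow described above can be traced with a short shape-bookkeeping sketch. It is not NiftyNet code: it assumes, hypothetically, that the initial downsampling and every DFS level halve each spatial dimension (rounding up, as a stride-2 convolution with 'SAME' padding does) and that each skip output keeps its level's resolution. It then reports the factor by which each skip must be upsampled to reach the initially downsampled size. The helpers `halve` and `trace_shapes` are illustrative names only.

```python
# Illustrative shape bookkeeping for a Dense-V-Net-style forward pass.
# Hypothetical assumptions (not taken from NiftyNet): the initial downsampling
# and every DFS level halve each spatial dimension, rounding up.
from typing import List, Tuple

Shape = Tuple[int, ...]


def halve(shape: Shape) -> Shape:
    # Ceiling division by 2, as for a stride-2 convolution with 'SAME' padding.
    return tuple(-(-s // 2) for s in shape)


def trace_shapes(input_shape: Shape,
                 n_levels: int = 3) -> Tuple[Shape, List[Shape], List[Shape]]:
    base = halve(input_shape)            # initial downsampling of the image
    skips: List[Shape] = []
    current = base
    for _ in range(n_levels):
        skips.append(current)            # skip link keeps this level's resolution
        current = halve(current)         # downsampled output feeds the next DFS
    # Upsampling factor per skip to match the base resolution
    # (exact when the sizes divide evenly).
    factors = [tuple(b // s for b, s in zip(base, skip)) for skip in skips]
    return base, skips, factors


if __name__ == "__main__":
    base, skips, factors = trace_shapes((144, 144, 144))
    print(skips)    # [(72, 72, 72), (36, 36, 36), (18, 18, 18)]
    print(factors)  # [(1, 1, 1), (2, 2, 2), (4, 4, 4)]
```

For a hypothetical 144 x 144 x 144 input, the three levels run at 72, 36 and 18 voxels per side, so under these assumptions the skips are upsampled by factors of 1, 2 and 4 before they are combined.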

The DenseFeatureStackBlockWithSkipAndDownsample layer implements [DFS + Conv + Downsampling] in a single module and outputs two elements:

- Skip layer: [ DFS + Conv ]

- Downsampled output: [ DFS + Down ]

class DenseFeatureStackBlock(n_dense_channels, kernel_size, dilation_rates, use_bdo, name='dense_feature_stack_block', **kwargs)

Bases: niftynet.layer.base_layer.TrainableLayer

Dense Feature Stack Block

- The stack is initialized with the input from the preceding layers.

- At each iteration, the output of a convolution layer is appended to the feature stack.

- Each successive convolution operates on all channels stacked so far.

Diagram example:

    feature_stack = [Input]
    feature_stack = [feature_stack, conv(feature_stack)]
    feature_stack = [feature_stack, conv(feature_stack)]
    feature_stack = [feature_stack, conv(feature_stack)]
    …
    Output = [feature_stack, conv(feature_stack)]
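The channel growth implied by this stack can be made concrete with a count-only sketch. It assumes, as an illustration rather than a statement of NiftyNet's internals, that one convolution is applied per entry of `dilation_rates` and that each convolution emits `n_dense_channels` new channels; the dilation rate changes the receptive field, not the channel count. The function name `dense_stack_channels` and the channel numbers in the example are hypothetical.

```python
# Count-only sketch of a dense feature stack (hypothetical helper, not NiftyNet API).
# Assumption: one convolution per dilation rate, each producing n_dense_channels.
from typing import List, Sequence, Tuple


def dense_stack_channels(n_input: int, n_dense_channels: int,
                         dilation_rates: Sequence[int]) -> Tuple[List[int], int]:
    n_stacked = n_input                  # feature_stack = [Input]
    conv_inputs: List[int] = []
    for _rate in dilation_rates:         # the rate affects receptive field only
        conv_inputs.append(n_stacked)    # each conv sees every stacked channel
        n_stacked += n_dense_channels    # its output is appended to the stack
    return conv_inputs, n_stacked


if __name__ == "__main__":
    seen, total = dense_stack_channels(24, 4, [1, 1, 1, 1, 1])
    print(seen)   # [24, 28, 32, 36, 40]
    print(total)  # 44, i.e. 24 + 5 * 4
```

Because the output keeps the input and every intermediate result, its channel count under these assumptions is `n_input + len(dilation_rates) * n_dense_channels`, which is why each later convolution sees a wider input than the one before it.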

class DenseFeatureStackBlockWithSkipAndDownsample(n_dense_channels, kernel_size, dilation_rates, n_seg_channels, n_down_channels, use_bdo, name='dense_feature_stack_block', **kwargs)

Bases: niftynet.layer.base_layer.TrainableLayer

Dense Feature Stack with Skip Layer and Downsampling

Downsampling is done through strided convolution.

    ---[ DenseFeatureStackBlock ]----------[ Conv ]------- Skip layer
                                 |
                                 ------------------------- Downsampled Output

See DenseFeatureStackBlock for more info.
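The shapes of this block's two outputs can be sketched as follows. This is a hypothetical illustration, not NiftyNet code: it assumes channel-last 3-D shapes, 'SAME' padding, a stride-2 downsampling convolution, one dense convolution per dilation rate, and that `n_seg_channels` and `n_down_channels` set the channel counts of the skip convolution and the strided convolution respectively. The helpers `same_conv_size` and `dfs_skip_down_shapes` are illustrative names only.

```python
# Hypothetical shape sketch for a DFS block with skip and downsampling.
# Assumptions: channel-last (x, y, z, c) shapes, 'SAME' padding, stride-2
# downsampling, one dense conv per dilation rate.
from typing import Sequence, Tuple

Shape4 = Tuple[int, int, int, int]


def same_conv_size(size: int, stride: int) -> int:
    # With 'SAME' padding a strided convolution yields ceil(size / stride).
    return -(-size // stride)


def dfs_skip_down_shapes(in_shape: Shape4, n_dense_channels: int,
                         dilation_rates: Sequence[int], n_seg_channels: int,
                         n_down_channels: int,
                         stride: int = 2) -> Tuple[int, Shape4, Shape4]:
    x, y, z, c = in_shape
    # Dense feature stack: resolution unchanged, channels grow per conv.
    stack_channels = c + len(dilation_rates) * n_dense_channels
    # Skip layer [ DFS + Conv ]: a stride-1 conv keeps the resolution.
    skip = (x, y, z, n_seg_channels)
    # Downsampled output [ DFS + Down ]: the strided conv reduces the resolution.
    down = (same_conv_size(x, stride), same_conv_size(y, stride),
            same_conv_size(z, stride), n_down_channels)
    return stack_channels, skip, down


if __name__ == "__main__":
    print(dfs_skip_down_shapes((72, 72, 72, 24), 4, [1] * 5, 12, 24))
    # (44, (72, 72, 72, 12), (36, 36, 36, 24))
```

Under these assumptions only the downsampled branch changes the spatial size, so odd sizes round up (37 becomes 19) while the skip branch stays aligned with the block's input.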