niftynet.network.vae module

-

class

VAE(w_initializer=None, w_regularizer=None, b_initializer=None, b_regularizer=None, name='VAE')[source]¶ Bases:

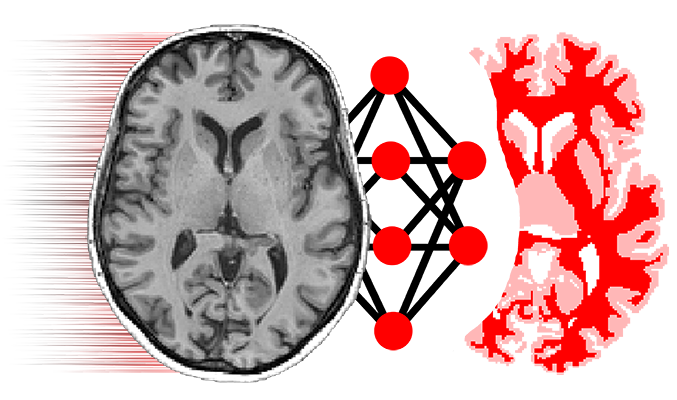

niftynet.layer.base_layer.TrainableLayerThis is a denoising, convolutional, variational autoencoder (VAE), composed of a sequence of {convolutions then downsampling} blocks, followed by a sequence of fully-connected layers, followed by a sequence of {transpose convolutions then upsampling} blocks. See Auto-Encoding Variational Bayes, Kingma & Welling, 2014. 2DO: share the fully-connected parameters between the mean and logvar decoders.
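For orientation, the cited paper regularises the latent posterior with a KL divergence to a standard normal prior, which has a closed form for a diagonal Gaussian parameterised by means and log-variances. The sketch below illustrates only that formula. The function name `kl_to_standard_normal` is hypothetical and not part of NiftyNet, and this page does not state how NiftyNet computes its own loss.

```python
import math


def kl_to_standard_normal(means, logvars):
    """KL( N(mean, exp(logvar)) || N(0, 1) ), summed over latent dimensions.

    Hypothetical helper for illustration; not a NiftyNet function.
    """
    return -0.5 * sum(
        1.0 + logvar - mean * mean - math.exp(logvar)
        for mean, logvar in zip(means, logvars)
    )


# A posterior equal to the prior has zero divergence.
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))
# Shifting one mean away from zero makes the divergence positive.
print(kl_to_standard_normal([1.0], [0.0]))
```

The term is zero when the posterior matches the prior and grows as the means move away from zero or the variances move away from one.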

class ConvEncoder(denoising_variance, conv_output_channels, conv_kernel_sizes, conv_pooling_factors, acti_func_conv, layer_sizes_encoder, acti_func_encoder, serialised_shape, w_initializer=None, w_regularizer=None, b_initializer=None, b_regularizer=None, name='ConvEncoder')

Bases: niftynet.layer.base_layer.TrainableLayer

This is a generic encoder composed of {convolutions then downsampling} blocks followed by fully-connected layers.
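To make the {convolutions then downsampling} structure concrete, the following sketch tracks how a spatial shape might shrink through the blocks before it is flattened for the fully-connected layers. It assumes shape-preserving convolutions and ceiling division when pooling; neither assumption is confirmed by this page. The helper names `downsampled_shapes` and `serialised_size` are hypothetical and are not NiftyNet functions.

```python
import math


def downsampled_shapes(input_shape, pooling_factors):
    """Spatial shape after each downsampling block (assumed ceil division).

    Hypothetical helper; convolutions are assumed to preserve spatial size.
    """
    shape = tuple(input_shape)
    shapes = [shape]
    for factor in pooling_factors:
        shape = tuple(math.ceil(dim / factor) for dim in shape)
        shapes.append(shape)
    return shapes


def serialised_size(spatial_shape, channels):
    """Number of features once the final feature map is flattened."""
    return math.prod(spatial_shape) * channels


shapes = downsampled_shapes((24, 24, 24), [2, 2, 2])
print(shapes)
print(serialised_size(shapes[-1], channels=16))
```

Under these assumptions, three pooling factors of 2 reduce a 24x24x24 volume to 3x3x3, which is the kind of quantity an argument such as `serialised_shape` would presumably need to agree with.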

class GaussianSampler(number_of_latent_variables, number_of_samples_from_posterior, logvars_upper_bound, logvars_lower_bound, w_initializer=None, w_regularizer=None, b_initializer=None, b_regularizer=None, name='gaussian_sampler')

Bases: niftynet.layer.base_layer.TrainableLayer

This predicts the mean and log-variance parameters, then generates an approximate sample from the posterior.
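The sampling step can be sketched with the reparameterisation trick from the cited paper: clip the predicted log-variances, convert them to standard deviations, and add scaled standard-normal noise to the means. This is a minimal pure-Python sketch. The function `sample_posterior` is hypothetical, and the way the bounds are applied here is an assumption suggested by the `logvars_upper_bound` and `logvars_lower_bound` argument names, not a description of NiftyNet's implementation.

```python
import math
import random


def sample_posterior(means, logvars, lower_bound, upper_bound, rng):
    """Draw one sample per latent variable via the reparameterisation trick.

    Hypothetical helper; clipping to [lower_bound, upper_bound] is an assumption.
    """
    samples = []
    for mean, logvar in zip(means, logvars):
        clipped = min(max(logvar, lower_bound), upper_bound)
        std = math.exp(0.5 * clipped)  # log-variance -> standard deviation
        samples.append(mean + std * rng.gauss(0.0, 1.0))
    return samples


rng = random.Random(0)
print(sample_posterior([0.5, -1.0], [0.0, -2.0], -50.0, 50.0, rng))
```

Bounding the log-variances keeps `exp` from overflowing or collapsing to zero, which is one plausible reason for exposing the two bounds as arguments.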

class ConvDecoder(layer_sizes_decoder, acti_func_decoder, trans_conv_output_channels, trans_conv_kernel_sizes, trans_conv_unpooling_factors, acti_func_trans_conv, upsampling_mode, downsampled_shape, w_initializer=None, w_regularizer=None, b_initializer=None, b_regularizer=None, name='ConvDecoder')

Bases: niftynet.layer.base_layer.TrainableLayer

This is a generic decoder composed of fully-connected layers followed by {upsampling then transpose convolution} blocks. There is no batch normalisation on the final transpose convolutional layer.

class FCDecoder(layer_sizes_decoder, acti_func_decoder, w_initializer=None, w_regularizer=None, b_initializer=None, b_regularizer=None, name='FCDecoder')

Bases: niftynet.layer.base_layer.TrainableLayer

This is a generic fully-connected decoder.